The intersection of radiomics, artificial intelligence and radiation therapy


Defined as a method that extracts mineable data from radiographic medical images, radiomics can potentially provide information that an oncologist and/or medical physicist may not detect with the human eye alone.

As Gillies et al put it, “Images are more than pictures, they are data.”1 With the increasing number of data recognition tools and the emergence of machine learning (ML) and deep learning (DL), the ability to extract information beyond visual interpretation has become a significant trend. An integral component of radiomics is the integration of ML and DL algorithms.

“We are at a watershed moment, moving away from handcrafted features to understand and develop imaging biomarkers for evaluating a patient’s response to radiation therapy, to a black box approach with deep learning taking over that task,” says Raymond H. Mak, MD, a thoracic radiation oncologist and associate professor of radiation oncology at Harvard Medical School, Brigham and Women’s Hospital, and Dana-Farber Cancer Institute in Boston.

Dr. Mak and Hugo Aerts, PhD, director of the Artificial Intelligence in Medicine (AIM) Program at Harvard-Brigham and Women’s Hospital, are helping to lead the development of DL and radiomic technologies applied to medical imaging data. Recognizing a need for a standardized extraction engine in radiomics, AIM has developed pyradiomics, an open source platform for reproducible radiomic feature extraction. Supported in part by a US National Cancer Institute grant, Pyradiomics is based on open source tools and platforms developed by big tech companies such as Google, Facebook and others.2

Toward Intelligence and a Multimodality Approach

At Duke University, Kyle Lafata, PhD, a postdoctoral associate in radiation oncology and the program director for AI Imaging, Woo Center for Big Data and Precision Health, is working toward developing and translating quantitative image analysis techniques and digital biomarkers into actionable intelligence that can be used in clinical practice, specifically radiation oncology.

Radiomics extracts features and information from medical images, including morphology (the 3-dimensional size and shape of the object), the intensity distribution of the signal within an image, texture (the relationship between voxels in an image), and the spatial interaction of those voxels.
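
The feature families described above can be illustrated with a minimal NumPy sketch. It is not how PyRadiomics implements them: the function names, the 25-unit bin width and the 8 gray levels are hypothetical choices, morphology is reduced to voxel-count volume, and texture is reduced to the contrast of a single 2D gray-level co-occurrence matrix (GLCM).

```python
import numpy as np

def first_order_features(image, mask, bin_width=25.0):
    """Intensity (first-order) features of the voxels inside a binary mask."""
    voxels = np.asarray(image, dtype=float)[np.asarray(mask, dtype=bool)]
    # Discretize intensities into fixed-width bins before computing entropy.
    bins = np.floor((voxels - voxels.min()) / bin_width).astype(int)
    _, counts = np.unique(bins, return_counts=True)
    p = counts / counts.sum()
    return {
        "mean": float(voxels.mean()),
        "std": float(voxels.std()),
        "energy": float(np.sum(voxels ** 2)),
        "entropy": float(-np.sum(p * np.log2(p))),
    }

def volume_mm3(mask, voxel_volume_mm3=1.0):
    """Simplest morphology feature: number of voxels in the mask times voxel volume."""
    return float(np.asarray(mask, dtype=bool).sum() * voxel_volume_mm3)

def glcm_contrast(image2d, levels=8, offset=(0, 1)):
    """Texture: contrast of a symmetric GLCM for one non-negative 2D (row, col) offset."""
    img = np.asarray(image2d, dtype=float)
    lo, hi = img.min(), img.max()
    if hi == lo:
        q = np.zeros(img.shape, dtype=int)
    else:
        # Quantize to integer gray levels 0 .. levels-1.
        q = np.rint((img - lo) / (hi - lo) * (levels - 1)).astype(int)
    dr, dc = offset
    glcm = np.zeros((levels, levels))
    rows, cols = q.shape
    for r in range(rows - dr):
        for c in range(cols - dc):
            glcm[q[r, c], q[r + dr, c + dc]] += 1
    glcm = glcm + glcm.T   # count each neighbor pair in both directions
    glcm /= glcm.sum()     # convert counts to joint probabilities
    i, j = np.indices(glcm.shape)
    return float(np.sum(glcm * (i - j) ** 2))

# A checkerboard maximizes neighbor differences; a uniform image has none.
board = (np.indices((4, 4)).sum(axis=0) % 2) * 100.0
print(glcm_contrast(board), glcm_contrast(np.ones((4, 4))))
```

Standardized toolkits exist precisely because choices such as bin width and gray-level quantization change the values of these features.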

“Images are unstructured data,” Dr. Lafata explains, “so the process is to transcribe them into structured datasets that can be combined with other information to use in diagnosis or prognosis.”

He adds that imaging measures such as the maximum standardized uptake value (SUVmax) are radiomic features, and that combining imaging and clinical data provides a more powerful prognostic effect than using either alone. ML/DL helps correlate the data so it can be used in clinical practice.
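
To show how a PET measurement becomes one column of a structured dataset, the sketch below computes body-weight SUV, takes its maximum inside a tumor mask, and appends clinical variables to the same record. It assumes activity concentrations are already decay-corrected to injection time and a tissue density of 1 g/mL; the function names and clinical fields are hypothetical.

```python
import numpy as np

def suv_bw(activity_bq_per_ml, injected_dose_bq, body_weight_kg):
    """Body-weight SUV: tissue activity / (injected dose / body weight in grams).

    Assumes decay-corrected activity and 1 g/mL tissue density (unitless result).
    """
    dose_per_gram = injected_dose_bq / (body_weight_kg * 1000.0)
    return np.asarray(activity_bq_per_ml, dtype=float) / dose_per_gram

def patient_row(pet_activity, tumor_mask, injected_dose_bq, body_weight_kg, clinical):
    """Turn one patient's PET image plus clinical data into a single structured record."""
    suv = suv_bw(pet_activity, injected_dose_bq, body_weight_kg)
    row = {"SUVmax": float(suv[np.asarray(tumor_mask, dtype=bool)].max())}
    row.update(clinical)  # hypothetical clinical fields, e.g., age and stage
    return row

# 370 MBq injected into a 74 kg patient gives 5000 Bq per gram of body weight.
activity = np.array([[1000.0, 5000.0], [10000.0, 2000.0]])  # Bq/mL
mask = np.array([[1, 1], [0, 1]])  # the 10000 Bq/mL voxel lies outside the tumor
print(patient_row(activity, mask, 370e6, 74.0, {"age": 67, "stage": "IIIA"}))
```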

“One domain knowledge isn’t enough—by combining integrative ‘omics’ we can learn more complex information,” Dr. Lafata says. His group has been looking at pathomics, the concept of extracting the same or similar features from digital pathology slides as they are extracting with radiomics. “By extracting both radiomics and pathomics data, we can start to see the appearance and behavior of disease across different spatial and functional domains.”

For example, by combining anatomic data from a computed tomography (CT) scan of a tumor mass with metabolic data on F-18 fluorodeoxyglucose (FDG) uptake from a positron emission tomography (PET) scan, and then adding features extracted from the pathology (the biopsy), Dr. Lafata can learn more about the tumor’s metabolism, its structure and the microscopic disease.

“Now, we have 3 levels of information that will tell us different things about that tumor,” he adds. “Depending on the machine learning model we intend to develop, we can use that data to make a diagnosis, guide treatment or determine therapeutic response.”
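
One simple way to combine the three levels of information, assumed here for illustration, is early fusion: standardize each modality's features and concatenate them into one vector per patient before model training. Late fusion, which combines the outputs of per-modality models, is a common alternative. The function names and feature values below are made up.

```python
import numpy as np

def zscore_columns(block):
    """Standardize each feature column (rows = patients) so no modality dominates by scale."""
    X = np.asarray(block, dtype=float)
    sd = X.std(axis=0)
    sd[sd == 0] = 1.0  # leave constant features at zero instead of dividing by zero
    return (X - X.mean(axis=0)) / sd

def early_fusion(*blocks):
    """Concatenate per-modality 2D feature blocks describing the same patients, same order."""
    n_rows = {np.asarray(b).shape[0] for b in blocks}
    if len(n_rows) != 1:
        raise ValueError("every modality must describe the same patients")
    return np.hstack([zscore_columns(b) for b in blocks])

ct = [[12.0, 0.8], [30.0, 0.5], [21.0, 0.9]]          # hypothetical CT features
pet = [[4.1], [9.3], [6.0]]                            # hypothetical PET feature (SUVmax)
path = [[0.2, 15, 3], [0.4, 22, 1], [0.3, 18, 2]]      # hypothetical pathomic features
fused = early_fusion(ct, pet, path)                    # one row of 6 features per patient
```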

Dr. Lafata says a multimodality approach must extend beyond a single discipline or domain to span radiology, pathology, genetics and health information, including the electronic medical record. By combining these disciplines, the clinician may uncover enough information to define the patient’s phenotype diagnostically and therapeutically.

Standardization and Other Challenges

Data access and sharing are central to collaborations among researchers and institutions. One way to expedite sharing while minimizing data privacy issues is distributed learning, in which models move to different institutions rather than requiring the data to be pooled in a central location, says Mattea Welch, a PhD candidate at the University of Toronto, who has co-authored several articles on radiomics as part of her doctoral studies and thesis. She has collaborated with Dr. Aerts and Andre Dekker, PhD, professor of clinical data science, MAASTRO Clinic, Maastricht University, The Netherlands.
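
The distributed learning idea can be sketched with federated averaging, one widely used scheme swapped in here as an assumption (the article does not name a specific method). Each institution runs a few gradient steps on its own data, and only model weights are sent to a central server, which averages them. A linear model and simulated data keep the example small; the function names are hypothetical.

```python
import numpy as np

def local_update(w, X, y, lr=0.1, epochs=5):
    """Run a few epochs of gradient descent on mean squared error at one institution."""
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w

def federated_averaging(sites, n_features, rounds=50):
    """sites: list of (X, y) arrays that stay at their institution; only weights travel."""
    w = np.zeros(n_features)
    total = sum(len(y) for _, y in sites)
    for _ in range(rounds):
        local_weights = [local_update(w, X, y) for X, y in sites]
        # Central server: average returned weights, weighted by each site's sample size.
        w = sum(len(y) / total * lw for lw, (_, y) in zip(local_weights, sites))
    return w

# Simulated example: three institutions whose data follow the same relationship.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
sites = []
for n in (40, 60, 80):
    X = rng.normal(size=(n, 2))
    sites.append((X, X @ true_w))
w = federated_averaging(sites, n_features=2)
```

The server only ever sees weight vectors. Real deployments add secure communication and must cope with sites whose data distributions differ, which this sketch ignores.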

“We are generating mass amounts of imaging data in the clinic every day, and there is potential to leverage that data, but we need to better understand what is driving the predictive and prognostic capabilities of the quantified imaging features,” Dr. Welch says. “There is a need for standardized methods and collaboration across disciplines and institutes.”

The intersection of computer science and medicine is not only an area of discovery but also where safeguards, standardization, and collaboration are needed to ensure reproducibility. This need led Dr. Welch and co-authors to highlight vulnerabilities in the radiomic signature development process and to propose safeguards that help ensure radiomic signatures are developed from objective, independent and informative features. These safeguards include using open source software, such as pyradiomics; testing models and features for prognostic and predictive accuracy against standard clinical features; testing for feature multicollinearity using a training dataset during model development; testing for underlying feature dependencies using statistical analysis or by perturbing data; ensuring image quality by preprocessing data to avoid erroneous features, such as those caused by metal artifacts; and including manual contouring protocols that describe the prevalent imaging signals used for delineation.3
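
Two of these safeguards, testing for multicollinearity and testing for underlying feature dependencies, can be sketched as simple correlation screens on the training set. Tumor volume is used here as the example dependency, and the 0.9 cutoff, feature names and function names are hypothetical choices, not values from Welch et al.

```python
import numpy as np

def spearman(a, b):
    """Spearman rank correlation (ties not handled; illustration only)."""
    ra = np.argsort(np.argsort(a))
    rb = np.argsort(np.argsort(b))
    return float(np.corrcoef(ra, rb)[0, 1])

def screen_features(features, volume, threshold=0.9):
    """Flag features that act as volume proxies and feature pairs that are highly collinear.

    features: dict mapping feature name -> values over the training patients only.
    """
    names = list(features)
    volume_proxies = [n for n in names if abs(spearman(features[n], volume)) >= threshold]
    collinear_pairs = [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if abs(spearman(features[a], features[b])) >= threshold
    ]
    return volume_proxies, collinear_pairs

volume = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
features = {
    "energy": volume * 10.0,                          # scales directly with volume
    "surface_area": volume ** 0.67,                   # also monotone in volume
    "entropy": np.array([3.0, 1.0, 4.0, 1.5, 2.0]),   # unrelated to volume
}
proxies, pairs = screen_features(features, volume)
```

Here `energy` and `surface_area` would be flagged as volume surrogates and as a redundant pair, while `entropy` passes both screens.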

“The main take-home message is that collaboration between researchers and clinicians is needed to ensure understanding of the nuances of clinical data and methods being used for radiomics,” Dr. Welch adds.

Variations in systems, software and reconstruction algorithms across manufacturers, and their impact on data reproducibility and prognostic capability, are an area of concern and active research. One position, notes Dr. Welch, is that if extracted features are not stable across different systems and data perturbations, then perhaps they are not prognostic or predictive.
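
One way to quantify whether a feature is stable across systems, assumed here for illustration, is Lin's concordance correlation coefficient (CCC) between values measured on the same patients under two conditions (two scanners, a test-retest pair, or an original and a perturbed image). Unlike Pearson correlation, CCC penalizes a systematic offset. The 0.85 cutoff and the function names are hypothetical.

```python
import numpy as np

def concordance_ccc(x, y):
    """Lin's concordance correlation coefficient between paired measurements."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    cov = np.mean((x - x.mean()) * (y - y.mean()))
    return float(2.0 * cov / (x.var() + y.var() + (x.mean() - y.mean()) ** 2))

def stable_features(scan_a, scan_b, threshold=0.85):
    """Keep features whose values agree across the two acquisitions."""
    return [name for name in scan_a if concordance_ccc(scan_a[name], scan_b[name]) >= threshold]

scan_a = {"a": [1.0, 2.0, 3.0], "b": [1.0, 2.0, 3.0]}
scan_b = {"a": [1.0, 2.1, 2.9], "b": [2.0, 3.0, 4.0]}  # "b" is shifted by one unit
print(stable_features(scan_a, scan_b))
```

Feature `b` is perfectly correlated across the two acquisitions but offset by one unit, so its CCC is 4/7 (about 0.57) and it is dropped.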

Another issue is that imaging systems were not engineered with radiomic applications in mind. “Now, we are treating images as data. It’s a reverse engineering process. Since we are now using these systems in nontraditional ways, we need them to be more quantitatively sound. For example, if we want to use radiomics to differentiate a benign vs a malignant tumor, then we need to make sure the features capture the underlying phenotype of the disease, and not the underlying noise distribution of the imaging system.”
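
The concern about capturing noise instead of phenotype can be demonstrated with a synthetic phantom, an illustration rather than a model of any real scanner. A perfectly uniform object has no biological heterogeneity, yet a heterogeneity-type feature grows as simulated Gaussian noise is added. The feature used here, the standard deviation of intensities, is a deliberately crude, hypothetical stand-in for texture.

```python
import numpy as np

def heterogeneity(roi):
    """Crude 'texture' surrogate: standard deviation of intensities in the region."""
    return float(np.std(roi))

rng = np.random.default_rng(42)
phantom = np.full((64, 64), 100.0)  # perfectly uniform: no biological heterogeneity
for noise_sd in (0.0, 5.0, 20.0):
    noisy = phantom + rng.normal(0.0, noise_sd, phantom.shape)
    # Any nonzero value here reflects the simulated noise, not the object.
    print(f"noise SD {noise_sd:>4}: heterogeneity = {heterogeneity(noisy):.2f}")
```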

Additionally, different CT reconstruction algorithms, such as filtered back projection and iterative reconstruction, can affect image quality and, therefore, the radiomic data.

“A common problem in medicine is the issue of small sample sizes,” Dr. Lafata adds. “Even if we have a large data set, we need that feature data to match with the outcome to build a model. A problem across the board has been the ability to gather that robust information on each patient irrespective of having a large amount of input data for a machine learning algorithm.”
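
The matching problem Dr. Lafata describes amounts to an inner join between extracted features and recorded outcomes: patients without an outcome cannot be used for supervised training. The sketch also applies the common events-per-variable heuristic (roughly 10 outcome events per candidate feature in logistic regression), which is a rule of thumb rather than a strict requirement. Patient IDs, values and function names are hypothetical.

```python
def match_features_to_outcomes(features_by_id, outcomes_by_id):
    """Inner join: keep only patients who have both extracted features and a recorded outcome."""
    ids = sorted(set(features_by_id) & set(outcomes_by_id))
    X = [features_by_id[i] for i in ids]
    y = [outcomes_by_id[i] for i in ids]
    return ids, X, y

def max_features_by_epv(y, events_per_variable=10):
    """Rule-of-thumb cap on candidate features for a binary outcome."""
    n_events = sum(y)
    limiting = min(n_events, len(y) - n_events)  # the rarer outcome class limits the model
    return limiting // events_per_variable

# Five imaged patients, but usable outcomes exist for only three of them.
features_by_id = {"p1": [1.2, 0.4], "p2": [0.9, 0.7], "p3": [1.5, 0.1],
                  "p4": [1.1, 0.3], "p5": [0.8, 0.6]}
outcomes_by_id = {"p1": 1, "p3": 0, "p5": 1, "p9": 0}
ids, X, y = match_features_to_outcomes(features_by_id, outcomes_by_id)
```

With so few matched patients and events, the heuristic supports no candidate features at all, however many features were extracted per image.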

Even digital biomarkers are limited by source variation and unstable data, he says. A lot of work remains to harmonize and unify data and knowledge across domains such as genomics and radiomics.
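
A minimal sketch of one harmonization step, assumed for illustration: standardize a feature within each source (scanner or institution), then rescale it to the pooled mean and standard deviation. Methods such as ComBat go further, using empirical Bayes estimates and preserving biological covariates; this sketch does neither, and the function name is hypothetical.

```python
import numpy as np

def harmonize_by_site(values, sites):
    """Simplified per-site location/scale adjustment of one feature."""
    values = np.asarray(values, dtype=float)
    sites = np.asarray(sites)
    out = np.empty_like(values)
    pooled_mean, pooled_sd = values.mean(), values.std()
    for s in np.unique(sites):
        idx = sites == s
        sd = values[idx].std() or 1.0  # guard against a constant feature at one site
        out[idx] = (values[idx] - values[idx].mean()) / sd * pooled_sd + pooled_mean
    return out

# The same underlying spread, offset by 10 units at site B.
out = harmonize_by_site([1.0, 2.0, 3.0, 11.0, 12.0, 13.0], ["A", "A", "A", "B", "B", "B"])
```

A caveat: if case mix genuinely differs between sites, this adjustment removes real biological differences along with scanner effects.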

“We need to understand the intricacies of the data and the learning algorithm,” Dr. Lafata says. “The uncertainty of machine learning models is not as straightforward as conventional statistical propagation of error. It comes down to the complex relationship between the data that is being measured and the response of a learning algorithm to that data.”
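
The bootstrap is one model-agnostic way, chosen here as an assumption, to expose how a learned model's output depends on the particular data it was trained on: refit the model on resampled patients and look at the spread of its predictions. Ordinary least squares stands in for a machine learning model, the data are simulated, and the function name is hypothetical.

```python
import numpy as np

def bootstrap_interval(X, y, x_new, n_boot=500, seed=0):
    """Refit least squares on resampled patients; return the 2.5th and 97.5th
    percentiles of the prediction at x_new."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    X1 = np.column_stack([np.ones(len(y)), X])        # add an intercept column
    x1 = np.concatenate([[1.0], np.atleast_1d(x_new)])
    preds = []
    for _ in range(n_boot):
        idx = rng.integers(0, len(y), len(y))          # resample patients with replacement
        coef, *_ = np.linalg.lstsq(X1[idx], y[idx], rcond=None)
        preds.append(x1 @ coef)
    return np.percentile(preds, [2.5, 97.5])

rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 30)
y = 3.0 * x + 1.0 + rng.normal(0.0, 0.3, x.size)
low, high = bootstrap_interval(x, y, x_new=0.5)
```

For a flexible nonlinear model the same loop applies, but the spread can depend strongly on which patients are resampled, which is one reason it differs from a closed-form propagation of error.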

Human Factors and Potential

“Traditional radiomics require a human for analysis,” Dr. Mak says. To analyze a tumor, for example, the oncologist would manually segment it. This could introduce human biases into the data, based on the clinician’s use of feature libraries and understanding of the tumor biology. When a DL model is trained, it can learn from the underlying data without the need for initial human interpretation.
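
Variability between manual segmentations is commonly quantified with the Dice similarity coefficient, 2|A∩B| / (|A| + |B|), which is 1 for identical contours and 0 for disjoint ones. The sketch below is a minimal NumPy version; the masks are synthetic squares standing in for two clinicians' contours.

```python
import numpy as np

def dice(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    # Two empty masks are treated as perfect agreement.
    return 1.0 if denom == 0 else float(2.0 * np.logical_and(a, b).sum() / denom)

observer_1 = np.zeros((20, 20), dtype=bool)
observer_2 = np.zeros((20, 20), dtype=bool)
observer_1[5:15, 5:15] = True   # 10 x 10 contour
observer_2[5:15, 7:17] = True   # same size, shifted 2 pixels
print(dice(observer_1, observer_2))
```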

“We think that with DL we can minimize human biases. However, the chief concern is whether that DL-derived data is interpretable and what is that DL algorithm learning from?” says Dr. Mak. “Is that DL performing according to task?”

Yet, despite such concerns, Dr. Mak believes radiation oncology will continue the pursuit of applying DL-based tools to radiomics. DL and radiomics will have a role in 3 primary areas: 1) aiding diagnosis; 2) predicting treatment response and patient outcome; and 3) augmenting humans in manual tasks, such as segmentation and radiation therapy planning.

The development of automated planning has already begun. Dr. Mak was lead author on a paper describing how a crowd innovation challenge was used to spur development of AI-based auto segmentation solutions for radiation therapy planning that replicated the skills of a highly trained physician.4
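
Auto-segmentation output is typically compared with expert contours using overlap metrics such as Dice and boundary metrics such as the Hausdorff distance. The article does not say how the challenge scored submissions, so the metric here is an illustrative assumption. This brute-force version compares two sets of contour points and suits only small examples.

```python
import numpy as np

def hausdorff_distance(points_a, points_b):
    """Symmetric Hausdorff distance between two point sets of shape (N, 2) or (N, 3)."""
    a = np.asarray(points_a, dtype=float)
    b = np.asarray(points_b, dtype=float)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # all pairwise distances
    # Worst-case distance from either contour to the nearest point on the other.
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))

expert = [[0.0, 0.0], [1.0, 0.0]]
auto = [[0.0, 0.0], [1.0, 0.0], [4.0, 0.0]]  # one stray point 3 units away
print(hausdorff_distance(expert, auto))
```

Unlike Dice, this metric is driven by the single worst boundary error, so one stray point dominates the result.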

“The potential of this type of technology to save time and costs and also increase accuracy is significant, particularly for areas of the world where this type of skill and specialty may not be available or is understaffed,” says Dr. Aerts.

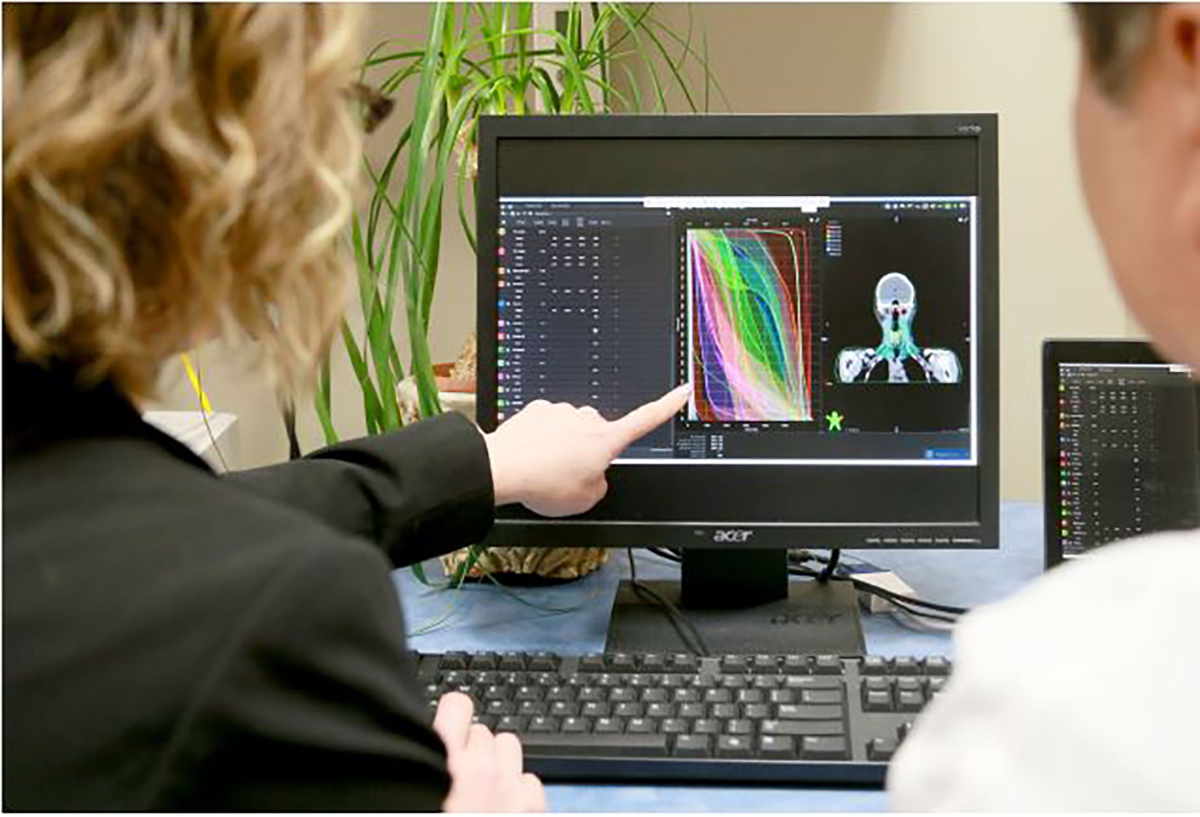

According to Dr. Lafata, the lab at Duke Radiation Oncology pioneered mathematical techniques to optimize treatment planning and help determine the best plan for meeting dosimetric constraints. This early work inspired Varian (Palo Alto, California) to commercialize the methodology as the RapidPlan knowledge-based treatment planning solution (Figure 1).
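
The core idea of knowledge-based planning, learning from a library of prior plans which doses are achievable given a patient's anatomy, can be sketched with a single geometric feature. This is a hypothetical simplification and not RapidPlan's model: it fits an organ-at-risk mean dose as a linear function of the fraction of the organ overlapping an expanded target, then predicts an achievable mean dose for a new patient. The prior-plan data and function names are made up.

```python
import numpy as np

def fit_dose_model(overlap_fraction, mean_dose_gy):
    """Fit OAR mean dose as a linear function of OAR/target overlap across prior plans."""
    slope, intercept = np.polyfit(overlap_fraction, mean_dose_gy, deg=1)
    return slope, intercept

def predict_mean_dose(model, overlap_fraction):
    """Estimate an achievable OAR mean dose for a new patient's anatomy."""
    slope, intercept = model
    return slope * overlap_fraction + intercept

# Hypothetical library of five prior plans.
prior_overlap = np.array([0.05, 0.10, 0.20, 0.30, 0.40])
prior_mean_dose = np.array([7.5, 10.0, 15.0, 20.0, 25.0])  # Gy
model = fit_dose_model(prior_overlap, prior_mean_dose)
estimate = predict_mean_dose(model, 0.25)
```

A planner could then treat the estimate as a starting objective and review it, consistent with the human-machine interface Dr. Lafata describes.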

“Solutions such as RapidPlan that have a human-machine interface are lower risk than ones that don’t have the human element,” Dr. Lafata says.

Beyond what is commercially available, Drs. Aerts and Mak see potential for radiomics and AI/DL to aid treatment planning and the selection of the optimal plan as well as monitor patient response.

“Deep learning and radiomics will move beyond the traditional realm of predicting response and be applied to other aspects, such as identifying the patient’s biological phenotype and serial assessment of tumor evolution in response to treatment,” Dr. Mak adds.

Dr. Welch notes that some researchers are also seeking to predict toxicity using the same pattern recognition techniques as radiomics. “By quantifying the dose in different organs at risk using radiation therapy plans, we can predict toxicity or different outcomes such as loco-regional failure,” she says.
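
Quantifying dose in organs at risk usually starts from a dose-volume histogram (DVH). One classical way to turn it into a toxicity prediction, assumed here because the article does not name a model, is the Lyman-Kutcher-Burman (LKB) normal tissue complication probability (NTCP) model: compute the generalized equivalent uniform dose gEUD = (Σ vᵢ Dᵢ^(1/n))ⁿ over equal-volume voxels, then NTCP = Φ((gEUD − TD50) / (m · TD50)). TD50, m and n are organ-specific literature values; the numbers below are placeholders.

```python
import math
import numpy as np

def cumulative_dvh(dose_gy, organ_mask, bin_gy=1.0):
    """Fraction of the organ volume receiving at least each dose level."""
    d = np.asarray(dose_gy, dtype=float)[np.asarray(organ_mask, dtype=bool)]
    levels = np.arange(0.0, d.max() + bin_gy, bin_gy)
    volume_fraction = np.array([(d >= lv).mean() for lv in levels])
    return levels, volume_fraction

def lkb_ntcp(dose_gy, organ_mask, td50, m, n):
    """LKB NTCP from the gEUD of the organ's (equal-volume) voxel doses."""
    d = np.asarray(dose_gy, dtype=float)[np.asarray(organ_mask, dtype=bool)]
    geud = np.mean(d ** (1.0 / n)) ** n
    t = (geud - td50) / (m * td50)
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))  # standard normal CDF

dose = np.array([[0.0, 10.0], [20.0, 30.0]])  # toy 4-voxel organ, Gy
organ = np.ones((2, 2))
levels, vf = cumulative_dvh(dose, organ)
risk = lkb_ntcp(dose, organ, td50=30.0, m=0.2, n=1.0)  # placeholder parameters
```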

Delta radiomics is another area of research in which changes in the features are calculated using pre- and post-treatment images. Then, Dr. Welch explains, those delta-radiomic features can be tested to determine whether they correlate with different patient outcomes.
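
Computing delta-radiomic features is straightforward once the same features have been extracted at both time points. A minimal sketch, assuming features are stored as name-to-value dictionaries (a hypothetical format); the relative change is undefined when the pre-treatment value is zero, so the function returns NaN in that case.

```python
def delta_features(pre, post, relative=True):
    """Per-feature change between pre- and post-treatment images."""
    out = {}
    for name in pre.keys() & post.keys():
        if relative:
            out[name] = (post[name] - pre[name]) / pre[name] if pre[name] != 0 else float("nan")
        else:
            out[name] = post[name] - pre[name]
    return out

pre = {"volume": 40.0, "entropy": 4.0}
post = {"volume": 30.0, "entropy": 5.0}
print(delta_features(pre, post))
```

The resulting deltas would then be tested for association with patient outcomes, as Dr. Welch describes.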

“From the image capture to the treatment planning to dose delivery, [artificial intelligence (AI)] is revolutionizing the field of radiation therapy. AI will impact outcome prediction and enable better response monitoring,” Dr. Aerts says.

However, he cautions that human validation should remain an important aspect of any AI or radiomics-based solution to ensure high quality. In pursuing radiomics, AI should be used to reach a good solution quickly, with that solution still reviewed and approved by humans.

Several organizations are building large plan libraries and aggregating DL-based models across different data sets, Dr. Mak adds. However, the underlying concern remains that the data may not be entirely reliable and that quality may vary across data sets.

“Context is key for these radiomic and DL applications,” Dr. Mak says. “There can be interpretability problems or data quality issues. The key aspect for deep learning is to have a large and well-curated data set to train the model but also context-dependent expertise to develop and ensure the appropriate clinical application.”

The bottom line, says Dr. Lafata, is that although radiomics and DL sit at opposite ends of the spectrum, with radiomics a hand-crafted, human-driven approach and DL a computerized one, the two are complementary techniques that will enable the field of radiation therapy to interpret images and data beyond human capability and intuition.

References

- Gillies RJ, Kinahan PE, Hricak H. Radiomics: images are more than pictures, they are data. Radiology. 2016;278(2):563-577.

- van Griethuysen JJM, Fedorov A, Parmar C, et al. Computational radiomics system to decode the radiographic phenotype. Cancer Res. 2017;77(21):e104-e107.

- Welch ML, McIntosh C, Haibe-Kains B, et al. Vulnerabilities of radiomic signature development: the need for safeguards. Radiother Oncol. 2019;130:2-9.

- Mak RH, Endres MG, Paik JH, et al. Use of crowd innovation to develop an artificial intelligence-based solution for radiation therapy targeting. JAMA Oncol. 2019;5(5):654-661.

Citation

MB M. The intersection of radiomics, artificial intelligence and radiation therapy. Appl Radiat Oncol. 2019;(4):34-37.

December 28, 2019