RTAnswers online patient education materials deviate from recommended reading levels

Abstract

Objective: Patients are turning to the internet more often for cancer-related information. Oncology organizations need to ensure that appropriately written information is available for patients online. The aim of this study was to determine whether radiation oncology online patient information (OPI) provided by RTAnswers (RTAnswers.org, created by the American Society for Radiation Oncology [ASTRO]) is written at the sixth-grade level recommended by the National Institutes of Health (NIH), the U.S. Department of Health and Human Services (HHS), and the American Medical Association (AMA).

Methods: RTAnswers.org was accessed and online patient-oriented brochures for 13 specific disease sites were analyzed. Readability of OPI from RTAnswers was assessed using 10 common readability tests: New Dale-Chall Test, Flesch Reading Ease Score, Coleman-Liau Index, Flesch-Kincaid Grade Level, FORCAST test, Fry Score, Simple Measure of Gobbledygook, Gunning Frequency of Gobbledygook, New Fog Count, and Raygor Readability Estimate.

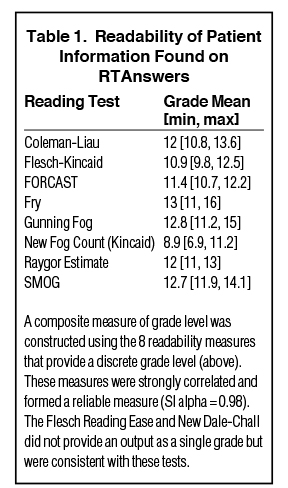

Results: A composite grade level of readability was constructed using the 8 readability measures that provide a single grade-level output. The grade levels computed by each of these 8 tests were highly correlated (SI alpha = 0.98). The composite grade level for these disease site-specific brochures was 11.6 ± 0.83, corresponding to a senior in high school, significantly higher than the target sixth-grade level (p < 0.05) recommended by the NIH, HHS, and AMA.

Conclusion: Patient educational material provided by RTAnswers.org is written significantly above the target reading level. Simplifying and rewording this information could improve patients’ understanding of radiation therapy and improve treatment adherence and outcomes.

Communication and education are imperative to the physician-patient relationship. The internet is a convenient source of information for patients, and nearly two-thirds of cancer patients seek information about their diagnosis online.1-3 Cancer patients often find information about treatments and the value of receiving a second opinion, and obtain support through the internet.3,4 Modern radiation therapy is highly personalized based on a complex interplay between patient characteristics, tumor characteristics, and previous treatments. Compared with other cancer treatments, radiation therapy is disproportionally associated with misconceptions and misunderstanding.5 Patients often leave a consultation trying to make sense of this information deluge and turn to the internet for answers.6 This use of online information can allow patients to more actively participate in their treatment.7 However, it is imperative that the information be presented in a way that can be accurately comprehended by most patients. This is especially important if patients first seek information online before going to see a physician, as is increasingly becoming the case.8

Most of our knowledge regarding the literacy of Americans comes from the U.S. Department of Education’s literacy surveys, conducted in 1982, 1992 and 2003. Most recently, the 2003 National Assessment of Adult Literacy (NAAL) assessed prose, document, and quantitative literacy in a representative sample of 19 000 adults (age 16 and older, including 1200 prisoners) from across the nation. This survey was the first to incorporate a component on health literacy, defined as “the ability of U.S. adults to use printed and written health-related information to function in society, to achieve one’s goals, and to develop one’s knowledge and potential.” The NAAL demonstrated that 43% of American adults have basic or below basic literacy skills.9 Regarding health literacy specifically, over one-third of American adults have health literacy at or below the basic level,10 and only 12% have proficient health literacy.11 Health literacy is a strong predictor of the health status of an individual,12 and those with poor health literacy demonstrate worse compliance with treatment recommendations and worse outcomes.12,13 Based on the U.S. literacy rate, the NIH, HHS, and AMA recommend that OPI be written at a sixth-grade level.14 These reported grade levels are derived from readability formulas, but do not necessarily indicate that an adult with a specific level of education will be able to read the text. Further, patients typically have a reading ability that is about 5 grades lower than the highest attained educational grade.15,16 To make informed healthcare decisions, patients must have access to both accurate and understandable information.

Our team and others have demonstrated that online patient educational materials from academic radiation oncology websites, National Cancer Institute (NCI)-designated cancer center websites, and other cancer websites are significantly more complex than recommended.17-20 This work has resulted in our cancer center revising online patient information. In this brief report, we hypothesized that radiation oncology patient information found on RTAnswers (RTAnswers.org, created by the American Society for Radiation Oncology [ASTRO]) is written at the recommended sixth-grade level and assessed the readability of this text using a panel of validated readability tests.

Methods and Materials

RTAnswers.org was accessed in May 2016, and OPI in the form of patient-oriented, disease-specific brochures for 13 disease sites was analyzed. Readability analysis was performed with Readability Studio version 2012.0 (Oleander Software, Hadapsar, India). Ten commonly used readability tests were employed: New Dale-Chall Test, Flesch Reading Ease Score, Coleman-Liau Index, Flesch-Kincaid Grade Level, FORCAST test, Fry Score, Simple Measure of Gobbledygook (SMOG), Gunning Frequency of Gobbledygook (Gunning FOG), New Fog Count, and Raygor Readability Estimate. These tests are well validated and commonly used to assess readability.21 The definition of a reading level is made on the basis of completed school years in the American school system. A composite score was constructed using the 8 tests that provide a single grade-level output (all tests except New Dale-Chall and Flesch Reading Ease Score).
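To illustrate how two of the tests named above turn text into a score, the following minimal sketch applies the published Flesch Reading Ease and Flesch-Kincaid Grade Level formulas. It is not the procedure used by Readability Studio: the function names are hypothetical, and the vowel-group syllable counter and the punctuation-based sentence splitter are rough assumptions, so the values it produces will differ from those of dedicated software.

```python
import re


def count_syllables(word):
    """Approximate syllables as runs of vowels (an assumption, not the software's method)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing "e" as silent unless the word ends in "le" (e.g. "care" -> 1).
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text):
    """Return (sentence count, word count, syllable count) for a passage."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return len(sentences), len(words), syllables


def flesch_reading_ease(text):
    """Flesch Reading Ease: higher scores indicate easier text."""
    n_sent, n_words, n_syll = text_stats(text)
    return 206.835 - 1.015 * (n_words / n_sent) - 84.6 * (n_syll / n_words)


def flesch_kincaid_grade(text):
    """Flesch-Kincaid Grade Level: approximate U.S. school grade."""
    n_sent, n_words, n_syll = text_stats(text)
    return 0.39 * (n_words / n_sent) + 11.8 * (n_syll / n_words) - 15.59
```

Both formulas depend only on average sentence length and average syllables per word, which is why shortening sentences and replacing polysyllabic terms lowers the computed grade level.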

Statistical analyses were performed using SPSS (IBM Corporation, Armonk, New York). All measures were compared to a sixth-grade reading level by t-tests, as this is the grade level recommended by NIH, HHS, and AMA.22
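As a sketch of what comparing a set of grade levels against a fixed sixth-grade target can look like, the example below computes a one-sample t statistic with the Python standard library. The analyses in this report were run in SPSS; the function name `one_sample_t` and the grade values are hypothetical and are not the study's data. A p-value can then be obtained from a t distribution with the returned degrees of freedom, for example with `scipy.stats.ttest_1samp`.

```python
import math
import statistics


def one_sample_t(values, target):
    """Return (t statistic, degrees of freedom) for H0: mean(values) == target."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    t_stat = (mean - target) / (sd / math.sqrt(n))
    return t_stat, n - 1


# Hypothetical grade levels for illustration only; NOT the study's data.
example_grades = [11.0, 12.0, 13.0, 12.0]
t_stat, df = one_sample_t(example_grades, target=6.0)
print(f"t = {t_stat:.2f} with {df} degrees of freedom")
```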

Results

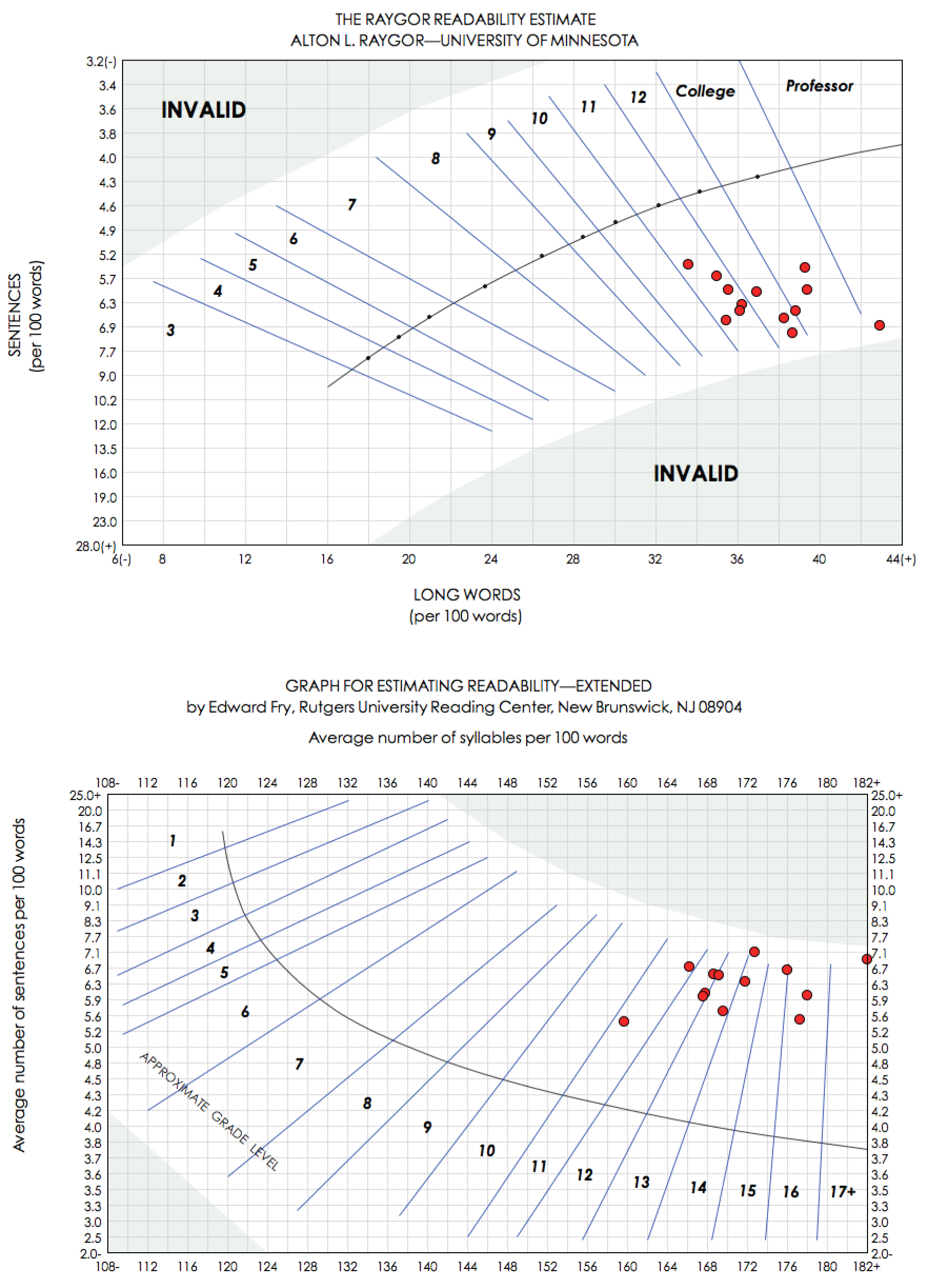

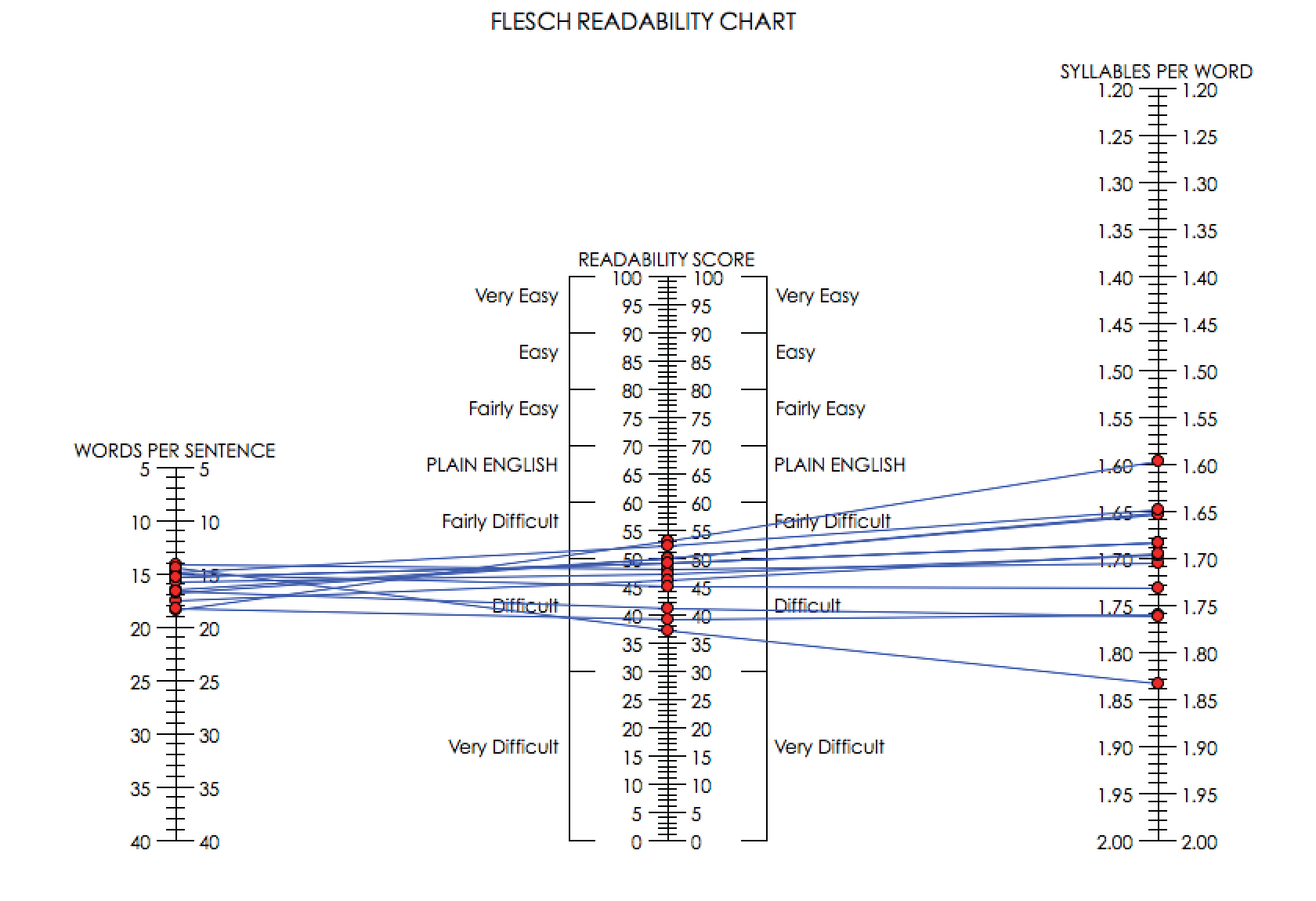

We analyzed the readability of 13 patient-oriented disease-specific brochures from RTAnswers. On all readability tests utilized, the readability of OPI from RTAnswers was significantly above target levels. The Raygor test, which analyzes both sentence length and the number of long words to derive a score, demonstrated a mean grade level of 12 (Figure 1A). Similarly, the Fry score, which incorporates sentence length and number of syllables per word, demonstrated a mean grade level of 13 (Figure 1B). The Flesch scale generates a score from 0-100 (with 100 being the easiest level of readability and 60-70 corresponding to “plain English”). This test focuses on words per sentence and syllables per word and is commonly used by U.S. government agencies to assess readability.23 The average score on the Flesch Reading Ease Scale was 47 (range 16-53) (Figure 2).
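For readers unfamiliar with the 0-100 Flesch scale, the short sketch below maps a score to the descriptive bands from Flesch's original scale. The function name `flesch_band` is hypothetical, and assigning a boundary score to the easier band is an assumption of this sketch.

```python
def flesch_band(score):
    """Map a Flesch Reading Ease score to a descriptive difficulty band."""
    bands = [
        (90, "very easy"),
        (80, "easy"),
        (70, "fairly easy"),
        (60, "standard (plain English)"),
        (50, "fairly difficult"),
        (30, "difficult"),
    ]
    # Return the first band whose lower bound the score meets.
    for lower_bound, label in bands:
        if score >= lower_bound:
            return label
    return "very difficult"


print(flesch_band(47))
```

Under these bands, the average score of 47 reported above falls in the "difficult" range, below the 60-70 range associated with plain English.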

We then constructed a composite grade level of readability of the 13 RTAnswers brochures using the 8 readability measures that provide a single grade-level output. The readability of patient brochures for all disease sites is far from the target level as determined by these readability tests (Table 1). Furthermore, the grade level computed by each of these 8 tests was highly correlated (SI alpha = 0.98). When combined, the tests yielded a composite grade level of 11.6 ± 0.8, corresponding to a senior in high school, and significantly greater than the recommended sixth-grade level (p < 0.05).
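The sketch below shows one way a composite grade level and an internal-consistency coefficient could be computed from a brochure-by-test table of grade levels. It uses Cronbach's alpha as a stand-in; whether this matches the "SI alpha" reported above is an assumption, as are the function names and the data, which are hypothetical and not the study's values.

```python
import statistics


def composite_scores(rows):
    """Average the grade levels across tests for each brochure (one row per brochure)."""
    return [statistics.mean(row) for row in rows]


def cronbach_alpha(rows):
    """Cronbach's alpha, treating each test (column) as an item."""
    k = len(rows[0])
    item_variances = [statistics.variance([row[j] for row in rows]) for j in range(k)]
    total_variance = statistics.variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical grade levels (4 brochures x 3 tests); NOT the study's data.
rows = [
    [10.0, 11.0, 10.5],
    [12.0, 13.0, 12.5],
    [11.0, 12.0, 11.5],
    [13.0, 14.0, 13.5],
]
composite = composite_scores(rows)
print(f"composite = {statistics.mean(composite):.1f} ± {statistics.stdev(composite):.2f}")
print(f"alpha = {cronbach_alpha(rows):.2f}")
```

In this toy table the three tests rank the brochures identically, so alpha equals 1; real tests that disagree more would yield a lower value.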

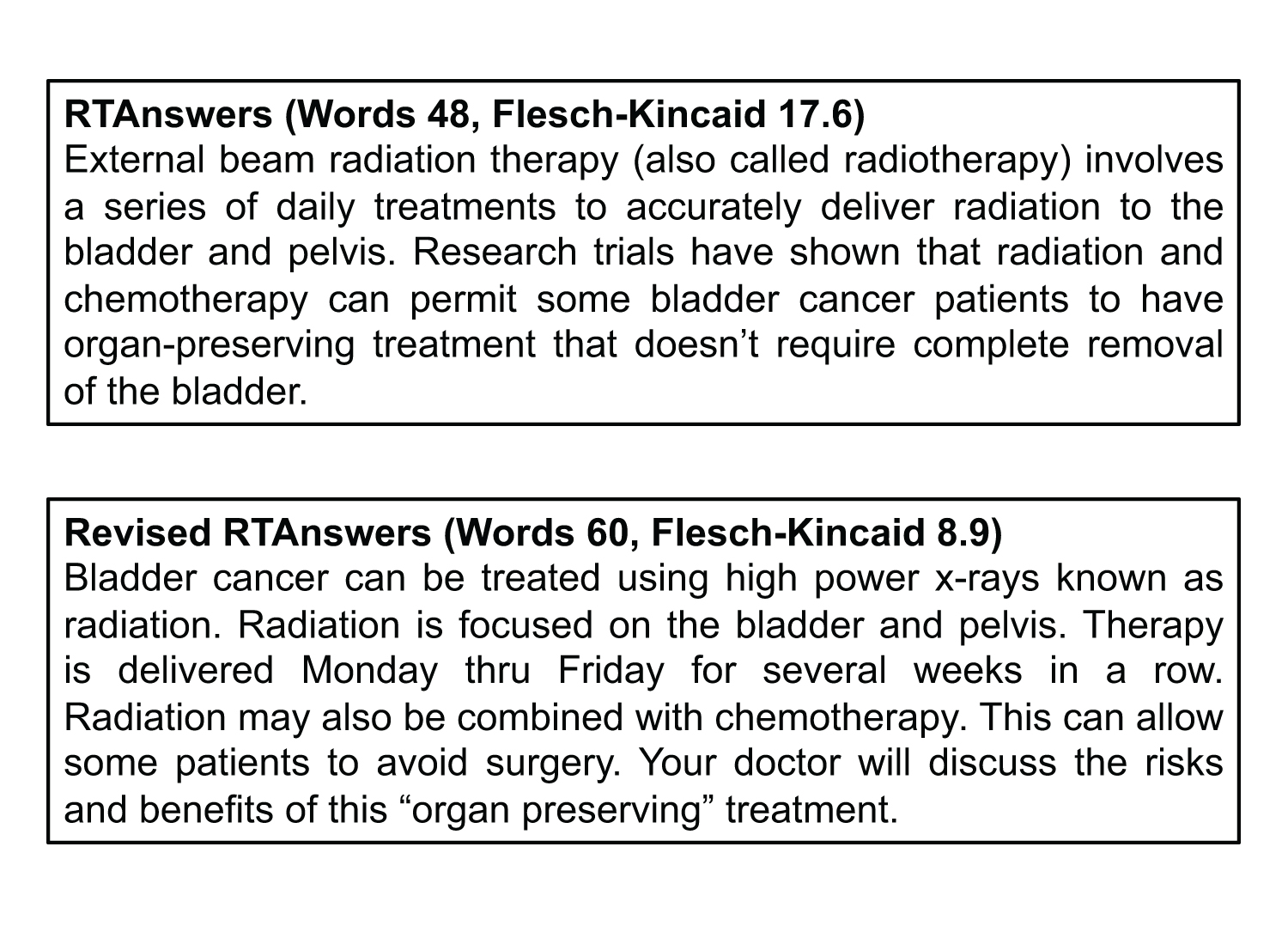

Next, we demonstrate one example of a sentence from a bladder cancer brochure from RTAnswers that we have edited to improve the readability (Figure 3). This correction decreased the Flesch-Kincaid reading level from 17.6 to 8.9. We conclude that similar alterations can be made to all RTAnswers brochures and other OPI to improve readability.

Discussion

Cancer patients commonly use the internet to seek information about their diagnosis and treatment.6 One major barrier to effectively understanding and utilizing this online information is the readability of the material. Our results indicate that online patient educational materials on RTAnswers are significantly more complex than the recommended sixth-grade level. This calculated grade level is similar to previous analyses of academic radiation oncology and NCI-designated cancer center websites.17-19 Furthermore, our findings are consistent with the readability of other online materials (eg, WebMD, NIH, Mayo Clinic) pertaining to the most common internal medicine diagnoses.24 This study builds on the work of Byun and Golden, who utilized the Flesch-Kincaid test to assess written ASTRO materials.25 However, our analysis goes a step further by extracting and analyzing information from patient brochures by each disease site found online.

To improve patients’ comprehension of radiation therapy and its role in their treatment, our analysis suggests that the language used in online patient information can be simplified to improve communication. Simple, easily understood language can reduce patient stress and anxiety, and improve the physician-patient relationship.26,27 To accomplish this, OPI provided by RTAnswers and academic cancer centers should use simple or well-known terminology, avoid medical or technical terms, use simple phrase and sentence structure, and incorporate feedback from nonmedical personnel into developing these brochures. An example of simplifying language is shown in Figure 3. This shows a sentence extracted from one of the brochures on bladder cancer and our interpretation to improve it. Similar techniques can be extrapolated to other OPI.

Improving readability of OPI can have a myriad of positive outcomes. Patients typically assess website accuracy based on its endorsement by a government agency or professional organization, their own perception of the website’s reliability, and their ability to understand the information presented.28,29 Improving readability increases the likelihood that patients will follow recommendations. Furthermore, improving readability of OPI supports effective communication; poor communication is a potential barrier, and addressing it may help overcome healthcare disparities.

Conclusion

The composite grade-level readability of OPI collected from RTAnswers was 11.6, corresponding to the senior year of high school. This was significantly greater than the target sixth-grade level. These differences may prevent understanding of OPI by the general public. The readability of OPI provided by RTAnswers can be improved to enhance patient understanding and improve outcomes.

References

1. Nelson DE, Kreps GL, Hesse BW, et al. The Health Information National Trends Survey (HINTS): development, design, and dissemination. J Health Commun. 2004;9(5):443-460; discussion 481-444.

2. Fox S, Rainie L. Vital decisions: how Internet users decide what information to trust when they or their loved ones are sick. Washington DC: The Pew Internet & American Life Project. Vol. 2016; 2002.

3. Shuyler KS, Knight KM. What are patients seeking when they turn to the Internet? Qualitative content analysis of questions asked by visitors to an orthopaedics Web site. J Med Internet Res. 2003;5(4):e24.

4. Ziebland S, Chapple A, Dumelow C, et al. How the internet affects patients’ experience of cancer: a qualitative study. BMJ. 2004;328(7439):564.

5. Hinds G, Moyer A. Support as experienced by patients with cancer during radiotherapy treatments. J Adv Nurs. 1997;26(2):371-379.

6. Eysenbach G. The impact of the Internet on cancer outcomes. CA Cancer J Clin. 2003;53(6):356-371.

7. Eysenbach G, Jadad AR. Evidence-based patient choice and consumer health informatics in the Internet age. J Med Internet Res. 2001;3(2):E19.

8. Hesse BW, Nelson DE, Kreps GL, et al. Trust and sources of health information: the impact of the Internet and its implications for health care providers: findings from the first Health Information National Trends Survey. Arch Intern Med. 2005;165(22):2618-2624.

9. Kutner M, Greenberg E, Jin Y, et al. Literacy in everyday life: results from the 2003 National Assessment of Adult Literacy; 2007.

10. White S. Assessing the nation’s health literacy: key concepts and findings of the National Assessment of Adult Literacy (NAAL): American Medical Association; 2008.

11. Cutilli CC, Bennett IM. Understanding the health literacy of America: results of the National Assessment of Adult Literacy. Orthop Nurs. 2009;28(1):27-32; quiz 33-24.

12. Baker DW, Parker RM, Williams MV, et al. The relationship of patient reading ability to self-reported health and use of health services. Am J Public Health. 1997;87(6):1027-1030.

13. Friedland R. Understanding health literacy: new estimates of the cost of inadequate health literacy. In: Congress of the U.S., ed, The Price We Pay for Illiteracy, Washington, DC: National Academy on an Aging Society; 1998;57-91.

14. Weiss BD. Manual for clinicians. Health literacy and patient safety: Help patients understand. Chicago: American Medical Association Foundation; 2007.

15. Jackson RH, Davis TC, Bairnsfather LE, et al. Patient reading ability: an overlooked problem in health care. South Med J. 1991;84(10):1172-1175.

16. Ley P, Florio T. The use of readability formulas in health care. Psychol Health Med. 1996;1:7-28.

17. Rosenberg SA, Francis DM, Hullett CR, et al. Online patient information from radiation oncology departments is too complex for the general population. Pract Radiat Oncol. 2016;7(1):57-62.

18. Prabhu AV, Hansberry DR, Agarwal N. Radiation oncology and online patient education materials: deviating from NIH and AMA recommendations. Int J Radiat Oncol Biol Phys. 2016;96(3):521-528.

19. Rosenberg SA, Francis D, Hullett CR, et al. Readability of online patient educational resources found on NCI-designated cancer center web sites. J Natl Compr Canc Netw. 2016;14(6):735-740.

20. Keinki C, Zowalla R, Wiesner M, et al. Understandability of patient information booklets for patients with cancer. J Cancer Educ. 2016.

21. Friedman DB, Hoffman-Goetz L. A systematic review of readability and comprehension instruments used for print and web-based cancer information. Health Educ Behav. 2006;33(3):352-373.

22. Assessing readability. Vol. 2016: Washington, DC: Dept of Health and Human Services; 2014.

23. Flesch R. A new readability yardstick. J Appl Psychol. 1948;32(3):221-233.

24. Hutchinson N, Baird GL, Garg M. Examining the reading level of internet medical information for common internal medicine diagnoses. Am J Med. 2016;129(6):637-639.

25. Byun J, Golden DW. Readability of patient education materials from professional societies in radiation oncology: Are we meeting the national standard? Int J Radiat Oncol Biol Phys. 2015;91(5):1108-1109.

26. Ha JF, Longnecker N. Doctor-patient communication: a review. Ochsner J. 2010;10(1):38-43.

27. Dorr Goold S, Lipkin M. The doctor-patient relationship: challenges, opportunities, and strategies. J Gen Intern Med. 1999;14 Suppl 1:S26-33.

28. Sbaffi L, Rowley J. Trust and credibility in web-based health information: a review and agenda for future research. J Med Internet Res. 2017;19(6):e218.

29. Vega LC, Montague E, Dehart T. Trust between patients and health websites: a review of the literature and derived outcomes from empirical studies. Health Technol (Berl). 2011;1(2-4):71-80.

Citation

SA R, RA D, D F, CR H, JM FMS, MF B, RJ K. RTAnswers online patient education materials deviate from recommended reading levels. Appl Radiat Oncol. 2018;(2):26-30.

June 19, 2018